AI-generated deepfake media is a growing threat to international security, yet deepfakes may also hold promise for counterterrorism. Through smart policies, public awareness campaigns and technical countermeasures, the threat of deepfakes may be mitigated while their promise is harnessed responsibly.

Deepfakes of celebrities including Taylor Swift, Elon Musk and the stars of Dragons’ Den have been created to promote everything from kitchenware to crypto scams and diet pills. Last year, a British man lost £76,000 to a deepfake scam in which Martin Lewis, the founder of Money Saving Expert, appeared to be promoting a non-existent bitcoin investment scheme. Scammers now have one crucial advantage, said Nick Stapleton, presenter of the award-winning BBC series Scam Interceptors and author of the book How to Beat Scammers.

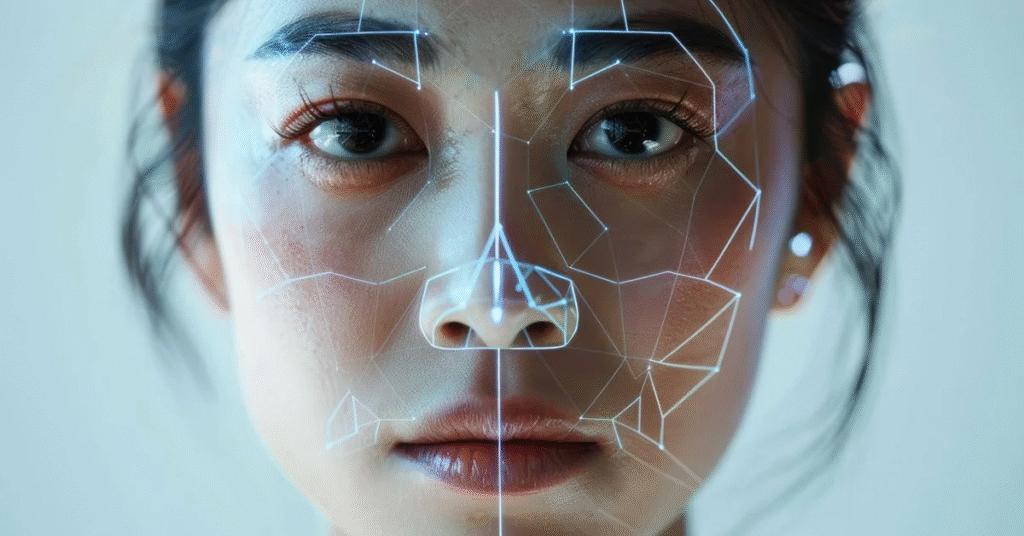

How do you spot a DeepFake? How good are DeepFake videos? How well can ordinary people tell the difference between a video manipulated by AI and a normal, non-altered video? Rather than try to explain in words, we built the Detect Fakes website so you can see the answer for yourself. Detect Fakes is a research project designed to answer these questions and identify techniques to counteract AI-generated misinformation. It turns out there are many subtle signs that a video has been algorithmically manipulated.

Disinformation and hoaxes have evolved from a mere annoyance into a form of warfare that can create social discord, increase polarisation and, in some cases, even influence election outcomes. Nation-state actors with geopolitical aspirations, ideological believers, violent extremists and economically motivated enterprises can manipulate social media narratives with ease and at unprecedented reach and scale. The disinformation threat has a new tool in the form of deepfakes.

“Deepfake” technology, which has progressed steadily for nearly a decade, can create talking digital puppets. The A.I. software is sometimes used to distort public figures, as in a video that circulated on social media last year falsely showing Volodymyr Zelensky, the president of Ukraine, announcing a surrender. But the software can also create characters out of whole cloth, going beyond traditional editing software and the expensive special effects tools used by Hollywood, and blurring the line between fact and fiction to an extraordinary degree.